Rationale for the Symposium

Scientific computation is emerging as absolutely central to the scientific method, but the prevalence of very relaxed practices is leading to a credibility crisis affecting many scientific fields. It is impossible to verify most of the results that computational scientists present at conferences and in papers today. Reproducible computational research, in which all details of computations -- code and data -- are made conveniently available to others, is a necessary response to this crisis.

This session addresses reproducible research from three critical vantage points: the consequences of reliance on unverified code and results as a basis for clinical drug trials; groundbreaking new software tools for facilitating reproducible research, pioneered in a bioinformatics setting; and new survey results elucidating the barriers scientists face in the practice of open science, together with proposed policy solutions designed to encourage open data and code sharing. A rapid transition is now under way -- visible particularly over the past two decades -- that will finish with computation as absolutely central to the scientific enterprise, cutting across disciplinary boundaries and international borders and offering a new opportunity to share knowledge widely.

Speakers and Slides

Audio for the symposium is here. Warning: it's 3 hours, 139MB, and not indexed by speaker or slide. I have, however, given time markers below for each of the talks.

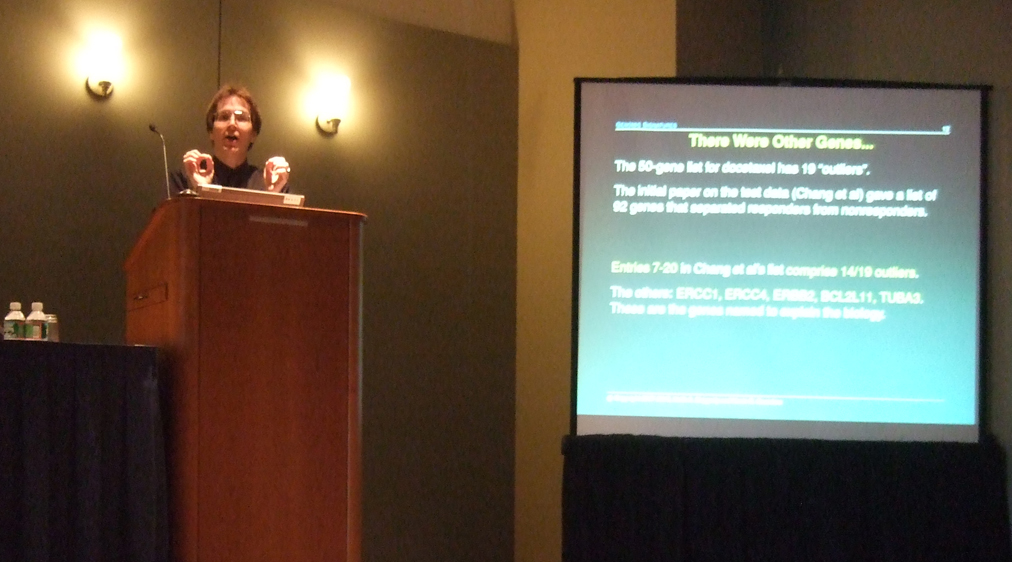

The Importance of Reproducibility in High-Throughput Biology: Case Studies (slides, 00:00 to 29:26 on the audio file)

Keith A. Baggerly, University of Texas M.D. Anderson Cancer Center

High-throughput biological assays let us ask very detailed questions about how diseases operate, and promise to let us personalize therapy. Data processing, however, is often not described well enough to allow for reproduction, leading to exercises in “forensic bioinformatics” where raw data and reported results are used to infer what the methods must have been. Unfortunately, poor documentation can shift from an inconvenience to an active danger when it obscures not just methods but errors.

In this talk, we examine several related papers using array-based signatures of drug sensitivity derived from cell lines to predict patient response. Patients in clinical trials were allocated to treatment arms based on these results. However, we show in several case studies that the reported results incorporate several simple errors that could put patients at risk. One theme that emerges is that the most common errors are simple (e.g., row or column offsets); conversely, it is our experience that the most simple errors are common. We briefly discuss steps we are taking to avoid such errors in our own investigations.
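
To make the row-offset failure mode concrete, here is a minimal sketch in Python. The sample IDs, labels, and helper names are all hypothetical and are not taken from the papers discussed in the talk. It shows how pairing labels with samples by row position silently scrambles every assignment once a column is shifted by one, while pairing by an explicit key does not.

```python
# Hypothetical toy data: four samples and their reference labels, keyed by ID.
samples = ["s1", "s2", "s3", "s4"]
labels_by_id = {
    "s1": "sensitive",
    "s2": "resistant",
    "s3": "sensitive",
    "s4": "resistant",
}

# The same labels as they might sit in a spreadsheet column, one per row.
label_column = [labels_by_id[s] for s in samples]


def align_by_position(ids, column, offset=0):
    """Pair IDs with labels by row position; a nonzero offset mimics a shifted column."""
    shifted = column[offset:] + column[:offset]
    return dict(zip(ids, shifted))


def align_by_key(ids, lookup):
    """Pair IDs with labels by explicit key; a missing ID raises KeyError."""
    return {i: lookup[i] for i in ids}


def mismatches(assigned, truth):
    """Return the sorted IDs whose assigned label differs from the reference label."""
    return sorted(i for i in assigned if assigned[i] != truth[i])


off_by_one = align_by_position(samples, label_column, offset=1)
keyed = align_by_key(samples, labels_by_id)

print("positional, offset 1:", mismatches(off_by_one, labels_by_id))
print("keyed:", mismatches(keyed, labels_by_id))
```

In this toy case the labels alternate, so a one-row shift flips every label; nothing in the shifted table looks wrong unless it is checked against an independent key.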

Policies for Scientific Integrity and Reproducibility: Data and Code Sharing (slides, 32:52 to 47:40 on the audio file)

Victoria C. Stodden, Columbia University

As computation emerges as central to the scientific enterprise, new modalities are necessary to ensure scientific findings are reproducible. Without access to the underlying code and data, it is all but impossible to reproduce today’s published computational scientific results. As seen in recent widely reported events, such as Climategate and the clinical trials scandal at Duke University, a lack of transparency in computational research undermines public confidence in science and slows scientific progress, engendering a credibility crisis.

In order to communicate scientific discoveries and knowledge through the release of the associated code and data, scientists face issues of copyright. In this talk I address copyright as a barrier to reproducible research and present open licensing solutions for computational science. My efforts, labeled The Reproducible Research Standard, are designed to realign the communication of modern computational scientific research with longstanding scientific norms.

With transparency in scientific research, a new era of access to computational science is at hand that does not confine understanding to specialists, but permits knowledge transfer not just between disciplines but to any interested person in the world with an internet connection.

Reproducible Software versus Reproducible Research (slides, extended abstract, 53:25 to 1:10:47 on the audio file)

As an active participant in both the scientific research and the open-source software development communities, I have observed that the latter often lives up better than the former to our ideals of scientific openness and reproducibility. I will explore the reasons behind this, and I will argue that these problems are particularly acute in computational domains where they should in fact be less prevalent.

Open source software development uses public fora for most discussion and systems for sharing code and data that are, in practice, powerful provenance tracking systems. There is a strong culture of public disclosure, tracking and fixing of bugs, and development often includes exhaustive automatic validation systems that are executed automatically whenever changes are made to the software and whose output is publicly available on the internet. This helps with early detection of problems, mitigates their reoccurrence, and ensures that the state and quality of the software is a known quantity under a wide variety of situations (operating systems, inputs, parameter ranges, etc.). Additionally, the very systems that are used for sharing the code track the authorship of contributions. All of this ensures that open collaboration does not dilute the merit or recognition of any individual developer, and allows for a meritocracy of contributors to develop while enabling highly effective collaboration.
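
As a rough illustration of the automatic validation described above, the following Python sketch shows a small set of checks that could be run on every change to an analysis function. The function `normalize` and the check names are hypothetical, and this is only one simple way to arrange such checks; real projects typically rely on a test framework and a continuous integration service.

```python
import math


def normalize(values):
    """Scale values so they sum to 1 (hypothetical analysis step under test)."""
    total = sum(values)
    if total == 0:
        raise ValueError("cannot normalize values that sum to zero")
    return [v / total for v in values]


def check_sums_to_one():
    # Invariant: the output always sums to 1, up to floating-point error.
    assert math.isclose(sum(normalize([1, 2, 3, 4])), 1.0)


def check_known_answer():
    # Regression check against a value worked out by hand.
    assert normalize([1, 3]) == [0.25, 0.75]


def check_rejects_zero_total():
    # Edge case: bad input must fail loudly rather than return garbage.
    try:
        normalize([0, 0])
    except ValueError:
        return
    raise AssertionError("expected ValueError for zero total")


CHECKS = [check_sums_to_one, check_known_answer, check_rejects_zero_total]


def run_checks():
    """Run every check and return the names of those that failed."""
    failed = []
    for check in CHECKS:
        try:
            check()
        except AssertionError:
            failed.append(check.__name__)
    return failed


if __name__ == "__main__":
    print(run_checks() or "all checks passed")
```

Running the same checks automatically after each change, and publishing the output, is what turns them from a private habit into the kind of public record the abstract describes.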

In sharp contrast we have incentives in computational research, strongly biased towards rapid publication of papers without any realistic requirement of validation, that lead to a completely different outcome. Publications in computationally-based research (applied to any specific discipline) often lack any realistic hope of being reproduced, as the code behind them is not available, or, if it is, it rarely has any automated validation, history tracking, bug database, etc.

I will discuss how we can draw specific lessons from the open source community both in terms of technical approaches and of changing the structure of incentives, to make progress towards a more solid base for reproducible computational research.

GenePattern (slides, 1:16:36 to 1:32:33 on the audio file)

The rapid increase in biological data acquisition has made computational analysis essential to research in the life sciences. However, the myriad of software tools to analyze this data were developed in diverse settings, without the capability to interact with one another or to capture the information necessary to reproduce an analysis. The burden of maintaining analytical provenance is therefore placed on the individual scientist. As a consequence, publications in biomedical research usually do not contain sufficient information for reproduction of the presented results. To alleviate these problems, we created a computational genomics environment called GenePattern which tracks the steps in the analysis of genomic data. Recently, in collaboration with Microsoft, we linked GenePattern to Microsoft Word. This resulting combination provides a Reproducible Research System that enables users to link analytical tools into workflows, to automatically record their work, to transparently embed that *recording* into a publication without ever leaving their word processing environment, and importantly to allow exact reproduction of published results.
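
The idea of automatically recording the steps of an analysis can be sketched in a few lines of Python. This is not GenePattern's API or implementation; the `recorded` decorator, the `PROVENANCE` log, and the two toy steps are hypothetical names chosen for illustration. Each call logs the step name, its parameters, and a digest of its output, so that a later rerun can be compared against the record.

```python
import hashlib
import json

# Hypothetical in-memory log; a real system would persist this with the publication.
PROVENANCE = []


def recorded(step):
    """Wrap an analysis step so each call logs its name, parameters, and output digest."""

    def wrapper(data, **params):
        result = step(data, **params)
        # Serialize the result canonically so the digest is stable across runs.
        encoded = json.dumps(result, sort_keys=True).encode()
        PROVENANCE.append(
            {
                "step": step.__name__,
                "params": params,
                "output_sha256": hashlib.sha256(encoded).hexdigest(),
            }
        )
        return result

    return wrapper


@recorded
def threshold(data, cutoff):
    """Keep only values at or above the cutoff."""
    return [x for x in data if x >= cutoff]


@recorded
def scale(data, factor):
    """Multiply every value by a constant factor."""
    return [x * factor for x in data]


# A two-step workflow: the log ends up with one entry per step, in call order.
result = scale(threshold([1, 5, 9], cutoff=4), factor=2)
print(result)
print(json.dumps(PROVENANCE, indent=2))
```

The point of the sketch is that the scientist writes only the workflow on the last lines; the record is produced as a side effect rather than by hand.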

Strategies for Reproducible Research (slides, 1:35:40 to 1:53:12 on the audio file)

I will discuss some practical considerations for engaging in the practice of reproducible research. These will consider different users (individual investigators, research groups and whole departments or organizations) as well as different uses (publication, internal communication, documentation of approaches that were tried).

A Universal Identifier for Computational Results (slides, 2:01:18 to 2:28:03 on the audio file)

We propose that scientific publications recognize the primacy of computational results -- figures, tables, and charts -- and follow a protocol we have developed which asks publishers for subtle, easy changes in article appearance, asks authors for simple, easy changes in a few lines of code in their programs and word processors, and yet has very far-reaching and, we think, lasting consequences.

The effect of these small changes will be the following. Each author would permanently register each computational result in a published article (figure, table, computed number in in-line text) with a unique universal result identifier (URI). Each figure or table appearing in a published article would have its URI clearly indicated next to that item: a string that permanently and uniquely identifies that computational result.

In our proposal, an archive, run by the publisher under the standard client/server architecture described here, will respond to queries about the URI and provide: (a) the figure/table itself; (b) metadata about the figure's creation; (c) (with permissions) data from the figure/table itself; (d) (with permissions) a related figure/table, obtained by changing the underlying parameters that created the original figure, but keeping everything else about the figure's creation the same.
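
The four kinds of response listed above can be illustrated with a toy in-memory archive in Python. This is a sketch under stated assumptions, not the protocol or implementation the authors describe: the `ResultArchive` class, its method names, and the use of a truncated SHA-256 digest as the identifier are all hypothetical choices made for the example.

```python
import hashlib
import json


class ResultArchive:
    """Toy in-memory stand-in for a publisher-run archive (hypothetical design)."""

    def __init__(self):
        self._records = {}

    def register(self, func, params, metadata):
        """Compute a result, store it with its recipe, and return its identifier."""
        value = func(**params)
        payload = json.dumps(
            {"func": func.__name__, "params": params, "value": value},
            sort_keys=True,
        )
        # Assumption: the identifier is derived from the content; any unique string would do.
        rid = hashlib.sha256(payload.encode()).hexdigest()[:16]
        self._records[rid] = {
            "func": func,
            "params": params,
            "value": value,
            "metadata": metadata,
        }
        return rid

    def query(self, rid):
        """Return the stored result, its inputs, and its metadata: items (a) to (c)."""
        rec = self._records[rid]
        return {
            "value": rec["value"],
            "params": rec["params"],
            "metadata": rec["metadata"],
        }

    def rerun(self, rid, **changes):
        """Recompute with some parameters changed and everything else fixed: item (d)."""
        rec = self._records[rid]
        return rec["func"](**{**rec["params"], **changes})


def mean_above(values, cutoff):
    """Hypothetical computation behind a published number."""
    kept = [v for v in values if v > cutoff]
    return sum(kept) / len(kept)


archive = ResultArchive()
rid = archive.register(
    mean_above,
    {"values": [1, 2, 3, 4], "cutoff": 1},
    {"article_item": "Table 1"},
)
print(rid)
print(archive.query(rid)["value"])
print(archive.rerun(rid, cutoff=2))
```

A real archive would add the permission checks mentioned in items (c) and (d) and would sit behind a server rather than in process memory.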

To better evoke the whole package we propose -- a URI, the specific content associated with it, and the server processes that record and serve up that content -- we call it a Verifiable Computational Result (VCR).

Our talk will describe an existing implementation of this idea and the advantages to journals, scientists, and government agencies of this approach.

Lessons for Reproducible Science from the DARPA Speech and Language Program (slides, 2:35:30 to 3:07:12 on the audio file)

Since 1987, DARPA has organized most of its speech and language research in terms of formal, quantitative evaluation of computational solutions to well-defined "common task" problems. What began as an attempt to ensure against fraud turned out to be an extraordinarily effective way to foster technical communication and to explore a complex space of problems and solutions. This engineering experience offers some useful (if partial) models for reproducible science, especially in the area of data publication; and it also suggests that the most important effects may be in lowering barriers to entry and in increasing the speed of scientific communication.
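
A "common task" evaluation can be sketched as scoring every submission against the same reference with the same fixed metric. The Python example below uses word error rate, a metric widely used in speech recognition evaluation; the reference sentence, the system names, and their outputs are invented for illustration and do not come from any DARPA evaluation.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by reference length (reference must be non-empty)."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] holds the edit distance between the reference prefix seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(
                min(
                    prev[j] + 1,             # reference word missing from hypothesis
                    curr[j - 1] + 1,         # extra word in hypothesis
                    prev[j - 1] + (r != h),  # match or substitution
                )
            )
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical shared reference and three hypothetical system outputs.
REFERENCE = "the cat sat on the mat"
submissions = {
    "system_a": "the cat sat on the mat",
    "system_b": "the cat sat on mat",
    "system_c": "a cat sat in the hat",
}

# Every system is scored the same way, so the numbers are directly comparable.
scores = {name: word_error_rate(REFERENCE, hyp) for name, hyp in submissions.items()}
ranking = sorted(scores, key=scores.get)

for name in ranking:
    print(f"{name}: {scores[name]:.3f}")
```

Because the data and the scoring function are shared, any group can rerun the evaluation and check a reported number, which is the property the abstract connects to reproducible science.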

links associated with Mark's talk:

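Fernando Perez, University of California, Berkeley (Reproducible Software versus Reproducible Research)

Michael Reich, Broad Institute of MIT and Harvard (GenePattern)

Robert Gentleman, Bioinformatics & Computational Biology, Genentech, Inc. (Strategies for Reproducible Research)

David Donoho, Stanford University; Matan Gavish, Stanford University (A Universal Identifier for Computational Results)

Mark Liberman, University of Pennsylvania (Lessons for Reproducible Science from the DARPA Speech and Language Program)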